RCNN

last modified : 13-06-2019

General Information

- Title: Rich feature hierarchies for accurate object detection and semantic segmentation

- Authors: Ross Girshick, Jeff Donahue, Trevor Darrell, Jitendra Malik

- Link: article

- Date of first submission: 11 November 2013

- Implementations:

Brief

This network is one of the pioneers of CNN-based object detection. Conceptually, it is closely related to the OverFeat network; as the article puts it: "OverFeat can be seen (roughly) as a special case of R-CNN."

Although the architecture is close to that of OverFeat, RCNN outperformed the previously reported results on the benchmarks listed in the Results section at the time of its publication.

One of the main contributions of the paper is to demonstrate the gain obtained by pre-training on a large auxiliary dataset and then fine-tuning on the target dataset.

RCNN is not trained end to end: the region proposal step, the CNN and the SVM classifiers are separate stages.

How Does It Work

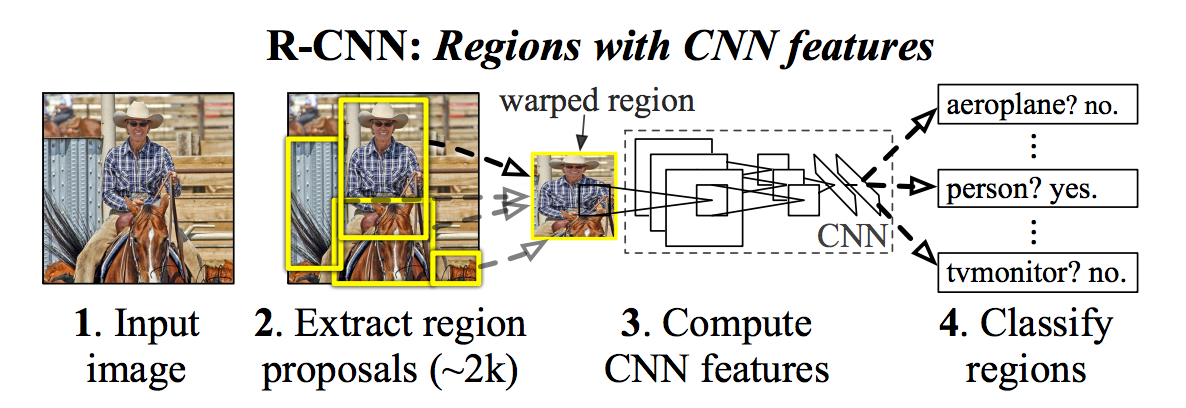

The network is made of three main parts, the region extractor, the feature extractor and finally the classifier. The whole network is shown in the following image:

A region proposal algorithm extracts regions of interest (ROIs), then each region is fed to a CNN feature extractor, and finally the extracted features are classified.
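The three stages can be sketched as a simple composition of functions. This is only an illustrative skeleton: `rcnn_detect`, `propose_regions`, `extract_features`, `svm_weights` and `svm_bias` are hypothetical names standing in for the real components, not the authors' code.

```python
import numpy as np

def rcnn_detect(image, propose_regions, extract_features, svm_weights, svm_bias):
    """Run the three stages on one image and return boxes with per-class scores.

    propose_regions(image)       -> (N, 4) boxes as (x1, y1, x2, y2)
    extract_features(image, box) -> 1-D feature vector for one box
    svm_weights: (num_classes, feature_dim), svm_bias: (num_classes,)
    All of these are hypothetical stand-ins for the real components.
    """
    # Stage 1: region extractor (selective search in the paper).
    boxes = np.asarray(propose_regions(image))
    # Stage 2: feature extractor, applied to each region independently.
    feats = np.stack([extract_features(image, box) for box in boxes])
    # Stage 3: classifier, one linear score per class and per region.
    scores = feats @ svm_weights.T + svm_bias
    return boxes, scores
```

Because every region goes through the feature extractor separately, the cost of stage 2 grows linearly with the number of proposals.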

Results

Results for the PASCAL VOC 2010 challenge:

| Model | mAP (%) |
|---|---|
| RCNN | 53.7 |
| SegDPM | 40.4 |
| Regionlets | 39.7 |
| UVA | 35.1 |
| DPM v5 | 33.4 |

Results for the ILSVRC 2013 challenge:

| Model | mAP (%) |
|---|---|
| RCNN | 31.4 |
| OverFeat | 24.3 |

In Depth

At test time

The first part of the network uses the selective search algorithm to generate around 2,000 candidate boxes (region proposals) that may contain objects.

Then, the second part uses the CNN from Krizhevsky et al. to compute a 4096-dimensional feature vector for each proposed box. The CNN input is a 227x227 mean-subtracted, warped RGB image of the region.
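The warping step can be illustrated with a minimal NumPy sketch. It makes several assumptions that are not in the text above: the helper name `warp_region` is hypothetical, nearest-neighbour sampling is used only to avoid extra dependencies, no context padding is added around the box, and the `mean` argument is a placeholder for whatever mean the CNN was trained with.

```python
import numpy as np

def warp_region(image, box, size=227, mean=(0.0, 0.0, 0.0)):
    """Crop `box` from `image` and warp it to size x size, ignoring aspect ratio.

    image: (H, W, 3) array; box: (x1, y1, x2, y2) in pixels, x2/y2 exclusive.
    mean:  per-channel value to subtract (placeholder, not the real mean).
    """
    x1, y1, x2, y2 = box
    crop = image[y1:y2, x1:x2].astype(np.float32)
    h, w = crop.shape[:2]
    # Map every output row/column back to a source row/column index.
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    warped = crop[rows][:, cols]
    return warped - np.asarray(mean, dtype=np.float32)
```

The result has a fixed shape of `(227, 227, 3)` whatever the shape of the proposal, which is what lets a CNN with fully connected layers process arbitrary boxes.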

Finally, each feature vector is scored by class-specific linear SVMs, and non-maximum suppression is applied to all the scored boxes, independently for each class, to keep the final detections.
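Greedy non-maximum suppression for a single class can be sketched as follows. The function names are hypothetical and the default `iou_threshold` of 0.3 is only an illustrative assumption; the paper describes the threshold as learned.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes, all (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def greedy_nms(boxes, scores, iou_threshold=0.3):
    """Return indices of the boxes kept for one class, best score first."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]  # indices sorted by decreasing score
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        # Drop every remaining box that overlaps the kept one too much.
        overlaps = iou_one_to_many(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep
```

In a full detector this would be called once per class, with that class's SVM scores for all the proposals of the image.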

At training time

The CNN is trained with its final fully connected (classification) layer modified to match the number of classes in the target dataset.

The network is first pre-trained "on a large auxiliary dataset (ILSVRC2012 classification) using image-level annotations only", with all of its classes. It is then fine-tuned on warped region proposals, after replacing the 1000-way classification layer with a smaller one that matches the number of target classes plus one for background.
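Fine-tuning on region proposals requires a label for each proposal. The paper reports treating proposals with at least 0.5 IoU overlap with a ground-truth box as positives for that box's class and the rest as background. A minimal sketch of that labelling rule, with hypothetical function names and class id 0 reserved for background as an assumption:

```python
import numpy as np

def pairwise_iou(a, b):
    """IoU between every box in a (N, 4) and every box in b (M, 4)."""
    a = np.asarray(a, dtype=float)[:, None, :]
    b = np.asarray(b, dtype=float)[None, :, :]
    iw = np.clip(np.minimum(a[..., 2], b[..., 2]) - np.maximum(a[..., 0], b[..., 0]), 0, None)
    ih = np.clip(np.minimum(a[..., 3], b[..., 3]) - np.maximum(a[..., 1], b[..., 1]), 0, None)
    inter = iw * ih
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_b = (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    return inter / (area_a + area_b - inter)

def label_proposals(proposals, gt_boxes, gt_classes, pos_iou=0.5):
    """Return one label per proposal: the matched class id, or 0 for background.

    gt_classes must use ids starting at 1, since 0 is used for background here.
    """
    iou = pairwise_iou(proposals, gt_boxes)
    best_gt = iou.argmax(axis=1)   # index of the best-overlapping ground truth
    best_iou = iou.max(axis=1)
    labels = np.asarray(gt_classes)[best_gt]  # fancy indexing returns a copy
    labels[best_iou < pos_iou] = 0
    return labels
```

With N object classes this yields N + 1 possible labels, which matches the size of the replaced classification layer.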

Finally, once the CNN has converged, one linear SVM per class is trained on the CNN features.